Artificial Intelligence is rapidly redefining how businesses manage digital assets, and its integration into an asset tokenization platform is changing how value is created, exchanged, and verified.

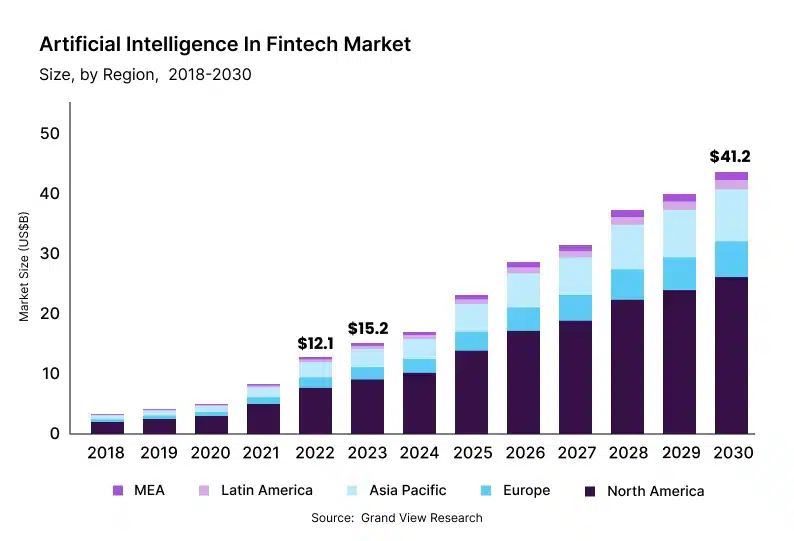

The global tokenization market was valued at USD 2.3 billion in 2021 and is projected to reach USD 5.6 billion by 2026, growing at a CAGR of 19.0%. In addition, the AI in fintech market is expected to exceed USD 41.16 billion by 2030, advancing at a CAGR of 16.5%.

Together, these trends highlight AI’s ability to streamline valuation, enhance liquidity, automate compliance, and drive stronger ROI across tokenized asset ecosystems.

In this guide, we will discuss why AI is crucial to asset tokenization and the business challenges it solves. We will also cover applications of AI in asset tokenization across industries and the trends shaping its future.

Why AI is Crucial in Asset Tokenization

AI acts as the foundation of modern asset tokenization platforms, automating critical processes, improving decision accuracy, and creating faster, data-driven ecosystems that enhance overall market efficiency.

1. Intelligent Asset Valuation

AI enhances the accuracy of valuation algorithms by analyzing historical data, market sentiment, and real-time indicators from multiple sources. Its predictive models adapt to shifts in demand, economic conditions, and investor behavior. This ensures tokenized assets are priced reasonably and consistently, thereby building transparency and stronger investor trust within the asset tokenization platform.

2. Predictive Risk Assessment

Machine learning models examine diverse datasets, including credit profiles, market patterns, and geopolitical trends, to anticipate potential risks. These systems detect early signs of market instability or asset manipulation. This proactive intelligence allows asset tokenization platforms to minimize exposure, strengthen governance, and maintain investor confidence across high-value, digitally tokenized portfolios.
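As a rough illustration of how early signs of instability might be detected, the sketch below flags price moves that deviate sharply from recent behavior. It uses a simple trailing z-score as a statistical baseline, not a trained machine learning model, and the function name, `window`, and `threshold` values are illustrative assumptions rather than part of any specific platform.

```python
from statistics import mean, stdev


def flag_anomalies(prices, window=5, threshold=3.0):
    """Flag price indices whose return is more than `threshold` standard
    deviations away from the mean of the trailing `window` returns.

    Assumes all prices are positive. `window` and `threshold` are
    illustrative defaults, not calibrated values.
    """
    returns = [prices[i] / prices[i - 1] - 1 for i in range(1, len(prices))]
    flagged = []
    for i in range(window, len(returns)):
        history = returns[i - window:i]
        sigma = stdev(history)
        if sigma == 0:
            continue  # no variation in the window, z-score undefined
        z = (returns[i] - mean(history)) / sigma
        if abs(z) > threshold:
            flagged.append(i + 1)  # returns[i] corresponds to prices[i + 1]
    return flagged


# Hypothetical token price series with one abrupt jump.
prices = [100, 101, 100, 101, 100, 101, 130, 131]
print(flag_anomalies(prices))
```

A production system would likely combine signals like this with the credit, market, and geopolitical datasets mentioned above; the z-score only shows the general shape of the check.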

3. Automated Compliance and Reporting

AI simplifies complex cross-border compliance frameworks by continuously scanning and interpreting evolving regulatory texts using Natural Language Processing. It automatically updates token issuance protocols, generates audit-ready reports, and flags inconsistencies in real-time. This reduces human error, accelerates compliance approval, and lowers operational costs across global asset tokenization platforms.

4. Liquidity Optimization

AI-driven algorithms help tokenization companies identify trading patterns, match orders, and predict liquidity gaps across tokenized markets. These systems facilitate faster asset transfers and dynamic pricing, minimizing idle capital and enhancing investor participation. The result is a more efficient, liquid, and accessible ecosystem for both institutional and retail participants on asset tokenization platforms.

Key Business Challenges Resolved Through AI-Driven Asset Tokenization

Integrating AI into asset tokenization platforms helps eliminate long-standing inefficiencies in financial operations, ensuring smarter settlements, better compliance management, and greater transparency across complex multi-asset environments.

1. Settlement Delays and High Transaction Costs

Traditional settlements involve manual verification and slow clearing cycles. AI automates these workflows by predicting transaction bottlenecks and executing settlements through smart contracts. This can reduce processing times from days to minutes, cutting intermediary costs and improving overall capital efficiency across asset tokenization platforms.

2. Data Reliability and Provenance Tracking

Asset tokenization depends on accurate, verifiable data. AI validates inputs from multiple sources, detects manipulation, and ensures consistent metadata before token issuance. Combined with blockchain integration, it builds tamper-evident data trails, enabling investors and regulators to trace every transaction with confidence across both decentralized and centralized financial ecosystems.

3. Inefficient Fund Administration and Collateral Management

Manual fund administration slows decision-making and invites errors. AI automates NAV calculations, reconciles complex portfolios, and monitors collateral values in real-time. By providing instant performance insights, it enables fund managers to make timely, data-backed decisions while improving operational transparency across asset tokenization platforms.
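The arithmetic behind automated NAV calculation and collateral monitoring can be sketched briefly. The example below is a minimal illustration, assuming prices arrive from some pricing feed or valuation model; the `Holding` class, the function names, and the 80% loan-to-value limit are hypothetical choices made for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Holding:
    name: str
    units: float
    price: float  # latest price per unit, e.g. from a hypothetical pricing feed


def nav_per_token(holdings, liabilities, tokens_outstanding):
    """NAV per token = (total asset value - liabilities) / tokens outstanding."""
    if tokens_outstanding <= 0:
        raise ValueError("tokens_outstanding must be positive")
    total_assets = sum(h.units * h.price for h in holdings)
    return (total_assets - liabilities) / tokens_outstanding


def collateral_alert(loan_balance, collateral_value, max_ltv=0.8):
    """Return True when loan-to-value exceeds the assumed limit."""
    if collateral_value <= 0:
        return True  # worthless collateral always breaches the limit
    return loan_balance / collateral_value > max_ltv


# Hypothetical fund: one property plus a block of treasury bills.
holdings = [
    Holding("office-tower", 1, 4_000_000.0),
    Holding("t-bills", 1_000, 1_000.0),
]
nav = nav_per_token(holdings, liabilities=500_000.0, tokens_outstanding=100_000)
print(f"NAV per token: {nav:.2f}")
print("Collateral alert:", collateral_alert(850_000.0, 1_000_000.0))
```

The calculation itself is deterministic; in the setup described above, the AI component would mainly supply and sanity-check the prices and collateral values that feed into it.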

4. Inconsistent Valuation of Illiquid Assets

Valuing assets like art, real estate, or private equity is often subjective. AI models combine market sentiment, historical data, and comparable sales to generate dynamic, fair valuations. These automated insights help stabilize token prices, attract institutional investors, and reduce price discrepancies in illiquid or volatile asset categories.
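One simple way to picture how comparable sales and sentiment could be combined is a similarity-weighted average with a capped sentiment adjustment. The sketch below is only an assumption about how such a model might be structured; the function name, the similarity weights, and the 5% cap are illustrative and not drawn from any particular valuation system.

```python
def comparable_valuation(subject_size, comps, sentiment=0.0, max_adjust=0.05):
    """Estimate a value from comparable sales.

    comps: list of (sale_price, size, similarity) tuples, with similarity
        in (0, 1] expressing how closely the comparable matches the subject.
    sentiment: score in [-1, 1]; shifts the estimate by at most `max_adjust`
        (an assumed cap of 5% by default).
    """
    total_weight = sum(sim for _, _, sim in comps)
    if total_weight <= 0:
        raise ValueError("need at least one comparable with positive similarity")
    # Similarity-weighted average price per unit of size.
    unit_price = sum(price / size * sim for price, size, sim in comps) / total_weight
    sentiment = max(-1.0, min(1.0, sentiment))
    return subject_size * unit_price * (1 + max_adjust * sentiment)


# Hypothetical comparables: (sale price, square metres, similarity weight).
comps = [(500_000, 100, 1.0), (660_000, 120, 0.5)]
estimate = comparable_valuation(110, comps, sentiment=0.0)
print(f"Estimated value: {estimate:,.2f}")
```

In practice the similarity weights and sentiment score would themselves come from trained models; the point here is only that the final estimate can remain transparent and auditable.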

5. Rising Compliance Costs and Regulatory Burden

Compliance teams face continuous updates to complex financial laws. AI-driven systems analyze regulatory changes across jurisdictions and align token issuance frameworks accordingly. They automate documentation, monitor suspicious activity, and maintain audit trails, reducing compliance costs while ensuring transparency and adherence to local and global regulatory standards.

6. Limited Liquidity in Private and Alternative Markets

Private markets often struggle with mismatches between buyers and sellers and low trading volume. AI-powered trading engines predict optimal trade timing, match orders automatically, and forecast liquidity demands. This enhances secondary market activity, broadens investor participation, and creates a fluid, efficient marketplace for tokenized private and alternative assets.
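Automatic order matching, at its core, pairs the highest bid with the lowest ask while they cross. The sketch below shows a minimal price-priority matcher; it is a deterministic illustration rather than an AI model, and the function name and the rule that fills execute at the ask price are simplifying assumptions.

```python
import heapq


def match_orders(bids, asks):
    """Match bids and asks by best price first, then arrival order.

    bids, asks: lists of (price, quantity) in arrival order.
    Returns a list of (price, quantity) fills. Fills are priced at the
    ask, which is a simplifying assumption for this sketch.
    """
    # Max-heap for bids (negated price), min-heap for asks; index breaks ties.
    bid_heap = [(-p, i, q) for i, (p, q) in enumerate(bids)]
    ask_heap = [(p, i, q) for i, (p, q) in enumerate(asks)]
    heapq.heapify(bid_heap)
    heapq.heapify(ask_heap)

    fills = []
    while bid_heap and ask_heap and -bid_heap[0][0] >= ask_heap[0][0]:
        neg_bid, bid_idx, bid_qty = heapq.heappop(bid_heap)
        ask, ask_idx, ask_qty = heapq.heappop(ask_heap)
        qty = min(bid_qty, ask_qty)
        fills.append((ask, qty))
        # Return any unfilled remainder to its book.
        if bid_qty > qty:
            heapq.heappush(bid_heap, (neg_bid, bid_idx, bid_qty - qty))
        if ask_qty > qty:
            heapq.heappush(ask_heap, (ask, ask_idx, ask_qty - qty))
    return fills


# Hypothetical order book for a tokenized private asset.
fills = match_orders(
    bids=[(101, 5), (99, 10)],
    asks=[(100, 3), (101, 4), (102, 7)],
)
print(fills)
```

A predictive layer, such as the trade-timing and liquidity forecasts described above, would sit around a matcher like this, deciding when to submit orders or where to route them.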

Strategic Applications of AI in Asset Tokenization Across Industries

AI transforms how asset tokenization platforms function across multiple industries. Here are its key applications:

1. Real Estate

AI refines real estate tokenization by assessing millions of data points, including market trends, tenant reliability, and location dynamics. Predictive models estimate rental yields, forecast appreciation, and detect risk exposure in real time. This allows investors to fractionalize real estate assets with confidence, ensuring transparent valuations and optimized liquidity within the asset tokenization platform ecosystem.

2. Commodities

AI strengthens commodity tokenization by analyzing production patterns, transportation data, and price fluctuations across global supply chains. It verifies the origin of each commodity and checks compliance with trade standards. These insights enable real-time tracking and valuation of tokenized commodities like gold, oil, or agricultural products, creating transparency and investor trust in traditionally opaque markets.

3. Carbon Credits

AI brings precision to carbon credit tokenization by validating emission data through satellite imaging, IoT sensors, and environmental databases. It eliminates double-counting and ensures that only verified carbon offsets are digitized. This transparency makes tokenized carbon markets more credible, scalable, and compliant with emerging sustainability reporting frameworks worldwide.
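The double-counting check can be pictured as a uniqueness test over credit serial numbers before anything is tokenized. The sketch below is a minimal illustration that assumes each credit carries a serial number from its registry; the function name, the tuple layout, and the sample serials are hypothetical.

```python
def find_double_counted(credits):
    """Return serial numbers that appear more than once across submissions.

    credits: iterable of (serial_number, project_id) tuples. Serials are
    normalized (trimmed, upper-cased) so formatting differences do not
    hide a duplicate.
    """
    seen = set()
    duplicates = []
    for serial, _project in credits:
        key = serial.strip().upper()
        if key in seen and key not in duplicates:
            duplicates.append(key)
        seen.add(key)
    return duplicates


# Hypothetical submissions from two projects claiming the same credit.
submissions = [
    ("crd-001", "project-a"),
    ("CRD-002", "project-a"),
    ("CRD-001 ", "project-b"),
]
print(find_double_counted(submissions))
```

Verification against satellite or sensor data is a separate and harder problem; this check only guards against the same verified credit being digitized twice.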

4. Art and Collectibles

AI enhances art tokenization through image recognition, provenance validation, and authenticity scoring. Machine learning models detect counterfeits, assess condition changes, and verify ownership lineage. This reduces forgery risks and enhances asset traceability, allowing investors to trade fractionalized art or collectibles confidently across secure digital marketplaces.

5. Infrastructure and Bonds

AI transforms tokenized infrastructure and bond management by automating performance tracking, risk evaluation, and yield forecasting. It analyzes maintenance data, financial health, and macroeconomic variables to predict project outcomes. This level of precision allows investors to assess long-term viability and make informed decisions with reduced risk exposure in asset-backed markets.

6. Private Credit

AI supports private credit tokenization through advanced credit scoring and borrower analytics. It processes unconventional data, such as transaction histories, revenue projections, and behavioral signals, to evaluate repayment capacity. This data-driven precision improves underwriting standards, expands access to financing, and builds confidence in digital credit marketplaces for both lenders and investors.

The Future of AI in Asset Tokenization

As technology advances, AI in asset management is set to redefine the next era of real-world asset tokenization platforms. To stay ahead of this transformation, organizations looking to integrate automation, data intelligence, and advanced analytics should hire AI developers with expertise in blockchain and tokenization technologies.

Here are trends that will shape the future of AI in asset tokenization:

1. Autonomous Agents as Market Participants

AI-driven agents will soon trade assets, manage portfolios, and engage with asset liquidity pools autonomously. These agents can analyze live data, execute trades instantly, and optimize asset allocation without human intervention, ensuring continuous market efficiency and minimizing downtime in tokenized ecosystems.

2. Zero-Latency Execution Across Liquidity Pools

AI will enable tokenized transactions to settle almost instantly by integrating predictive analytics with high-frequency trading mechanisms. These systems synchronize liquidity across decentralized and traditional markets, offering real-time order execution and seamless capital flow without manual coordination delays.

3. Dynamic Market Intelligence Embedded in Assets

Future tokenized assets will incorporate AI modules that track performance, assess risks, and adjust parameters autonomously. These self-learning tokens will monitor economic indicators and regulatory shifts, allowing real-time data synchronization, revaluation and compliance optimization, effectively turning each digital asset into an intelligent financial instrument.

4. Bridging Decentralized and Traditional Finance

AI will bridge data silos between DeFi protocols and traditional financial systems. Through standardized interoperability layers and smart analytics, AI in tokenization will synchronize settlement processes, risk reporting, and asset validation, fostering a unified market infrastructure that improves cross-platform transparency and operational efficiency.

5. Establishing AI as the Core of Tokenized Finance

AI will become the foundation of tokenized financial systems, governing everything from issuance to secondary trading. Its adaptive algorithms will manage liquidity, compliance, and pricing autonomously, creating a responsive financial environment that maximizes efficiency, market transparency, and profitability across the asset tokenization ecosystem.

Conclusion

By deploying an asset tokenization platform powered by AI, organizations unlock greater transparency, faster execution, and enhanced return on investment. The technology addresses core challenges across valuation, administration, liquidity, and regulatory oversight. Looking ahead, it lays the groundwork for tokenized markets that are efficient, resilient, and scalable, building trust among issuers, investors, and regulators alike.

By combining automation, predictive analytics, and intelligent governance, AI not only strengthens market confidence but also reduces systemic inefficiencies that limit growth. As these systems evolve, they will serve as the foundation for a new financial architecture in which tokenized assets move seamlessly, securely, and intelligently across global markets.

Debut Infotech is a reliable asset tokenization development company that provides AI-driven asset tokenization solutions. We build secure, scalable, and fully compliant platforms tailored to diverse asset classes.

With expertise in blockchain architecture, smart contract automation, and AI integration, we empower enterprises to digitize real-world assets, enhance liquidity, and streamline financial operations with unmatched reliability, precision, and innovation.

Our ultimate goal is to drive measurable ROI and long-term success in the evolving world of digital finance.